Protect Your Pod: Understanding Kubernetes Security and Why OpenAI is Down

It's finally time to understand the power (and danger) of Kubernetes, one of the key platforms behind Google, Uber, and OpenAI.

TL;DR

We define Kubernetes, discuss its unique challenges and architecture, and explore why it generates so many false positives.

We also break down the four Cs of Kubernetes security.

Kubernetes

Kubernetes has revolutionized how organizations (including OpenAI) deploy and manage applications, yet its security remains one of the most challenging aspects of modern infrastructure. This comprehensive guide examines Kubernetes through a security lens, revealing why traditional security approaches fall short and how teams can build effective defenses in containerized environments.

Kubernetes presents a fundamental contradiction: the very features that make it powerful for application deployment create significant security challenges. Organizations have embraced Kubernetes for its ability to accelerate software delivery, yet security teams often struggle to keep pace with its dynamic nature.

The platform's ephemeral containers, automated scaling, and interconnected services create an environment where traditional security monitoring generates overwhelming noise. Real threats become needles in haystacks of false alerts, leaving organizations vulnerable to sophisticated attacks. This makes Kubernetes an inviting entry point for attacks including data theft, distributed denial-of-service (DDoS), cryptojacking, and beyond.

This guide will examine how to minimize Kubernetes risks. We’ll explain Kubernetes, its unique challenges, its architecture, and how it can produce false positives. This will include a primer on the four Cs of Kubernetes security (code, container, cluster, and cloud).

Demystifying Kubernetes: An Orchestra Conductor for Applications

To understand the role Kubernetes (aka K8s, for the eight letters between "K" and "s") plays in modern software, we'll use a cargo shipping analogy. Modern shipping relies on standardized containers that can be handled, transported, and transferred across ships, trucks, trains, and planes without changing the container or the goods inside.

Software containers apply this same concept to applications. Developers bundle their code alongside all dependencies—frameworks, libraries, and runtime environments—into portable units. These containers deploy consistently across different infrastructure, from local servers to cloud platforms, adapting instantly to shifting operational demands.

Applying our cargo shipping analogy to containerized application deployment means that:

The goods being shipped are applications.

The shipping containers they’re loaded in are—no surprise—containers.

The port manager is Kubernetes.

The global shipping network is the cloud.

As a container orchestration platform, Kubernetes helps build, manage, and run cloud-native applications by abstracting the complexity of handling the thousands of containers that can be running at a time. DevOps teams use Kubernetes to:

Deploy containers, move them across hybrid environments, and create or destroy them as needed for scalability.

Connect containers with each other and with other networked services and resources.

Ensure high availability by monitoring container health and restarting or replacing failing containers.

That’s the good news. However, there’s also security to consider—which is where things get complicated.

The unique challenges of Kubernetes security

Given its role in orchestrating communications and moving resources across the network, Kubernetes is a powerful technology with a high potential for misuse. To cite just a few examples:

Cryptojacking. Threat actors frequently breach Kubernetes clusters and hijack their cloud resources to mine cryptocurrency. A successful high-profile attack on Tesla is just one example of this method. These attacks can significantly degrade performance and increase costs.

Data theft. Compromised containers expose stored credentials—passwords, API keys, tokens, certificates. Attackers leverage these secrets for lateral movement and persistent cluster access.

Distributed denial-of-service (DDoS). Kubernetes' auto-scaling becomes a weapon for generating massive traffic volumes. Compromised clusters join botnets or directly attack targets by exhausting their resources.

Backdoor creation. Attackers manipulate Kubernetes’ role-based access control (RBAC) to create admin accounts, ensuring continued access even after patching the original breach.

Supply chain attacks. Attackers inject malicious code into the components used to build containerized applications. When these images are pulled and deployed within a Kubernetes cluster, the malware can take advantage of its interconnectedness to move easily through the environment.

Network attacks. Vulnerabilities in cluster networking can allow attackers to intercept, modify, or manipulate traffic to disrupt services or gain unauthorized access.

Container runtime attacks. Attackers can use misconfigurations or vulnerabilities in the container runtime to bypass security controls, gain elevated permissions, or access the host system to abuse its resources.

Concerns like these aren’t just theoretical—they have real impacts on businesses. The 2024 Red Hat State of Kubernetes Security Report found that 67% of surveyed organizations had delayed or slowed deployment due to Kubernetes security concerns, while 46% had lost revenue or even customers due to a container or Kubernetes security incident.

Kubernetes security challenges stem from the platform's inherent complexity and constant change. The modular architecture that enables rapid development and deployment also creates multiple entry points for attackers across every layer of a system that never stops changing.

Unrestricted communication can allow unlimited abuse

Kubernetes permits all pod-to-pod communication by default. Without explicit network policies, one breached container can reach every service, database, and API in the cluster over the network. This violation of zero-trust principles means an attack can spread in seconds, not hours.

Constant change undermines visibility

Containers live for minutes or hours, then vanish, taking logs, forensic data, and attack traces with them. Traditional security tools expect persistent infrastructure. In Kubernetes, the crime scene disappears before the investigation begins. Any patches or changes applied directly to a running container disappear with it, too. In any system, security is a moment in time, not a steady state, and that's especially true in Kubernetes.

Given this changing landscape, monitoring and logging are the foundation of Kubernetes security. Containers are continuously spinning up, moving, communicating, and interacting with network resources. SecOps teams need continuous visibility and insight to detect anomalies, respond quickly to potential attacks, and remediate vulnerabilities.

But maintaining visibility into a dynamic Kubernetes environment is much more difficult than in a traditional software architecture, or even a cloud environment. You can’t just rely on cloud audit logs to detect Kubernetes events—you have to look at the Kubernetes cluster and API server logs directly. And that’s a noisy experience.

A single application deployment triggers hundreds of events across these systems. Distinguishing attacks from normal operations becomes nearly impossible when every action looks suspicious.

Understanding Kubernetes architecture

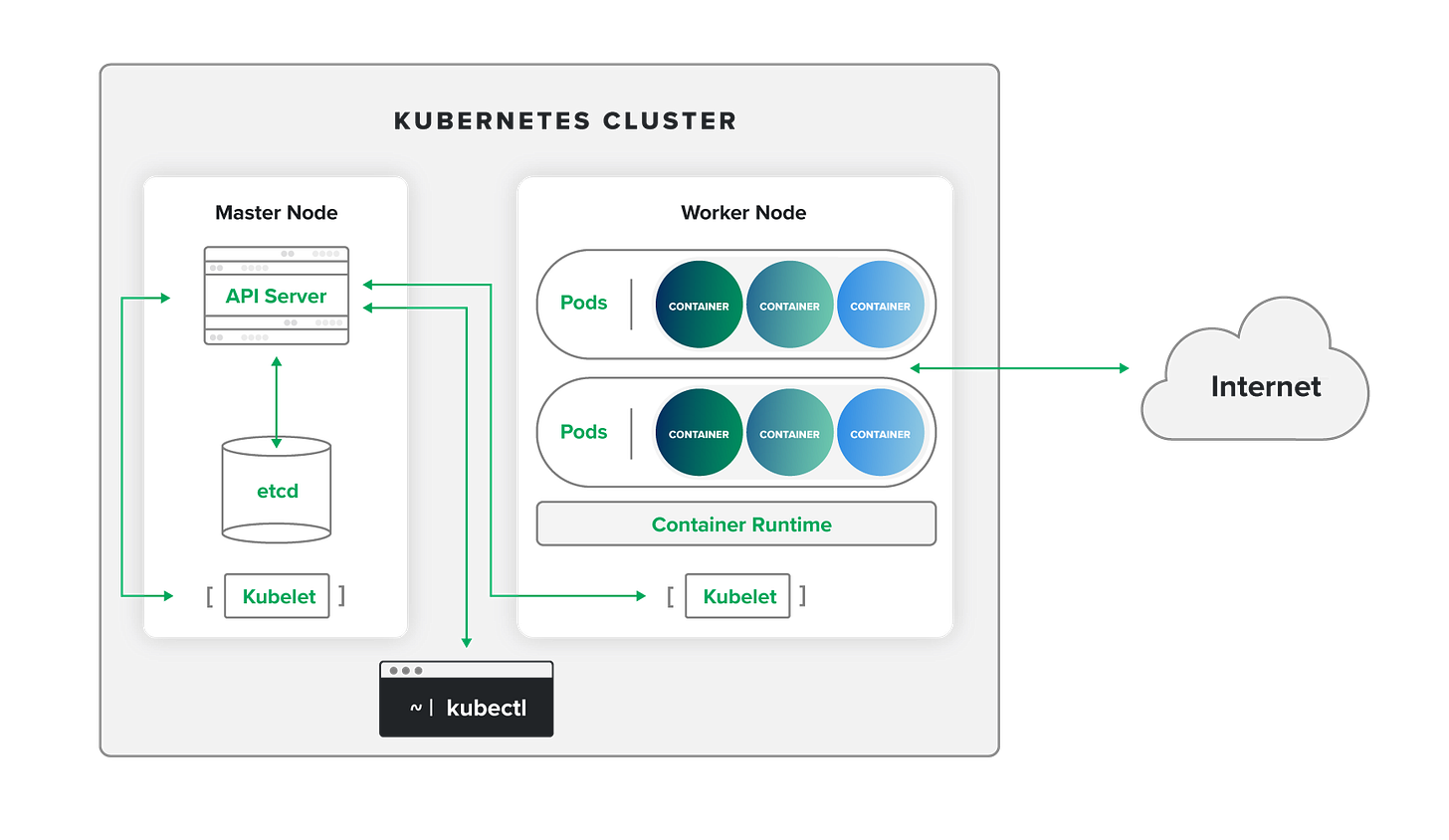

Understanding Kubernetes security requires mapping its architecture. Each component presents unique monitoring challenges and attack surfaces:

Cluster

A Kubernetes cluster is a set of machines that run containerized applications and manage their deployment, scaling, and operations. Using our cargo shipping analogy, the cluster is the entire port. Within the cluster, the master node (central operations hub) and worker nodes (individual docks) work together to handle containerized cargo.

The cluster’s supervising role makes it a prime attack target. Through its compromise, attackers can gain tremendous freedom to misuse the containerized applications it runs—as well as the infrastructure it resides on and the network where it’s operating.

Master node

The master node of a cluster hosts the control plane, manages its overall state, facilitates communication, and keeps the cluster running smoothly. In a shipping port, the master node would be the central operations hub where decisions are made about docking, activity coordination, and adherence to port standards and policies.

API server

The API server runs on the master node and serves as the frontend and central communication hub for the cluster. It manages information and processes deployment requests. In a shipping port, the API server would be the team in the control tower managing all incoming and outgoing messages among different docks, ships, cranes, shipping companies, and personnel.

For security architects, the API server is the most important component to focus on. A misconfigured API server can allow threat actors to gain unauthenticated access, escalate privileges, move laterally, and bypass security controls. It also provides access to the secrets in the etcd database (such as passwords, tokens, API keys, and certificates), which are stored unencrypted by default.
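To make "stored unencrypted by default" concrete: the values in a Secret's `data` field are only base64-encoded, which is an encoding, not encryption. The minimal Python sketch below uses a hypothetical `db-credentials` Secret with made-up values to show that anyone who can read the object (through the API server, or directly from etcd when encryption at rest is not configured) recovers the plaintext without any key.

```python
import base64

# A hypothetical Secret, shaped like the JSON the API server returns.
# Values under "data" are base64-encoded strings (illustrative credentials only).
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials", "namespace": "default"},
    "type": "Opaque",
    "data": {
        "username": base64.b64encode(b"admin").decode(),
        "password": base64.b64encode(b"s3cr3t-pa55").decode(),
    },
}


def reveal(secret_obj: dict) -> dict[str, str]:
    """Decode every value in a Secret's data field. No key is involved."""
    return {k: base64.b64decode(v).decode() for k, v in secret_obj["data"].items()}


print(secret["data"]["username"])  # YWRtaW4=
print(reveal(secret))
```

Encryption at rest for Secrets is a separate step: the API server must be started with an `EncryptionConfiguration` file (via `--encryption-provider-config`) before etcd holds anything other than this reversible encoding.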

Kubectl

DevOps teams use kubectl to interact with Kubernetes clusters. Providing a user-friendly interface, kubectl translates user commands into API requests and formats API responses for human readability. This makes it simpler for developers to deploy applications, inspect resources, manage cluster operations, and troubleshoot. Kubectl is like the communications team that port operators rely on to relay instructions to the control tower.

Worker node

Controlled by the master node, a worker node runs the workloads and manages the resources and services for the containers assigned to it. This includes pod networking, service proxying, storage, monitoring, and logging. In a shipping port, the worker node would be an individual dock handling the cargo for a given ship.

Pods

A pod is the smallest deployable unit in a Kubernetes cluster. It consists of one or more tightly coupled containers that share the same network namespace (including an IP address) and storage volumes. Pods are hosted by worker nodes and are usually defined in YAML manifests, which specify the container(s) each pod should run. Using our port analogy, a pod is a group of cargo shipping containers that belong to the same shipment.

Kubelet

Similar to the way the master node manages the entire cluster, a kubelet oversees an individual worker node and ensures that its containers are running correctly. The kubelet on each node reports back to the master node and receives instructions in return to start, stop, or modify containers. In a shipping port, kubelets would be the dock managers facilitating the correct loading and unloading of cargo.

Container runtime

While the kubelet receives instructions for managing the execution and lifecycle of containers, the container runtime executes these processes. This can include loading container images, starting and stopping containers, and ensuring they have the necessary resources and isolation. In a port, the container runtime would be the crew of dock workers doing the hands-on work.

etcd

etcd is the key-value store used to maintain the state and configuration of a cluster. It holds details about nodes, pods, configurations, and secrets. You can think of etcd as the shipping port’s central database, tracking the status of its ships, cargo, and docks.

As we survey the bustling port of our Kubernetes environment—so many moving parts, so much yelling back and forth—we clearly see the challenges of Kubernetes monitoring. There may well be genuine indicators of compromise (IOCs) or attack (IOAs) among all that noise. But the roar of false positives will likely drown them out.

Why Kubernetes monitoring produces so many false positives

False positives in a Kubernetes environment can have significant consequences for the business, including:

Overwhelmed and exhausted SecOps teams, who can lose focus on real incidents within the Kubernetes environment and elsewhere in the organization.

Failed detection of suspicious activities, performance issues, or potential security breaches, leading to damage that might have been preventable.

Difficulty gathering accurate and relevant logging data, making it hard to conduct post-incident forensics, comply with regulatory requirements, and identify root causes of security incidents.

Poor visibility into Kubernetes security incidents, which can slow development. This can undermine the agility and flexibility promised by cloud-native development and, as a result, block business goals.

Why does Kubernetes generate such a tremendous volume of false positives? It comes down to several factors intrinsic to the architecture of container-based applications.

Continuous change

The constant creation and destruction of containers can lead to alerts for pods that are simply being replaced as part of normal operations—or for transient issues that quickly resolve themselves with no need for attention.

Complex interdependencies

As we’ve seen, Kubernetes clusters encompass many interconnected components and services. A minor issue in one component can cascade and trigger multiple alerts across the system, even if the root cause is benign.

Resource fluctuations

Kubernetes monitoring tools often lack the context needed to understand application-specific behaviors such as auto-scaling activity, or short spikes in CPU or memory usage triggered by legitimate workloads.
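One common way to keep legitimate spikes from paging anyone is to alert only when a threshold is breached for several consecutive samples, similar in spirit to the `for` duration in Prometheus alerting rules. The sketch below is a minimal illustration; the 90% threshold, the 30-second sampling interval, and the three-sample window are assumptions, not recommendations.

```python
from collections.abc import Sequence


def sustained_breach(samples: Sequence[float], threshold: float, min_consecutive: int) -> bool:
    """Return True only if `threshold` is exceeded for at least
    `min_consecutive` samples in a row; isolated spikes are ignored."""
    run = 0
    for value in samples:
        run = run + 1 if value > threshold else 0  # reset the streak on any normal sample
        if run >= min_consecutive:
            return True
    return False


# CPU utilization as a fraction of the container's limit, sampled every 30 seconds.
spiky = [0.40, 0.95, 0.50, 0.97, 0.45, 0.50]      # brief bursts, e.g. during a scale-up
sustained = [0.40, 0.92, 0.94, 0.96, 0.93, 0.50]  # four high samples in a row

print(sustained_breach(spiky, threshold=0.9, min_consecutive=3))      # False
print(sustained_breach(sustained, threshold=0.9, min_consecutive=3))  # True
```

The trade-off is detection latency: a longer window suppresses more noise but delays alerts on genuine abuse such as cryptojacking.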

Default configurations

Kubernetes is a younger technology, and its monitoring tools can be less refined than mature cloud monitoring solutions. In an excess of caution, vendors often set preconfigured alert thresholds at a level that errs on the side of false positives rather than false negatives.

The four Cs of Kubernetes security: code, container, cluster, and cloud

Defense in depth depends on multi-layered security. The 4C security model is designed to protect cloud-native applications running in containerized environments—like Kubernetes—by focusing on each layer of the architecture.

Code

As in any type of application development, automated security testing—both static (SAST) and dynamic (DAST)—is essential to uncover vulnerabilities in code before deploying it in a Kubernetes environment. Other best practices include auditing your software supply chain to remove vulnerable or unsupported dependencies, and ensuring a manual code review stage before software enters production.

Code-level Kubernetes security also includes two other requirements specific to this type of environment:

Scanning for hardcoded secrets. Developers occasionally commit Kubernetes secrets, like API tokens, into source code. If they’re stored as plain text, they’re easily discoverable in an attack or inadvertently exposed in a version control system. They’re also hard to update or rotate regularly, and often fail compliance standards for the protection of sensitive data.
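The hardcoded-secrets scan can be sketched as a set of regular expressions run over each line of source. This is a minimal illustration, not a replacement for a dedicated scanner: the three rules are assumptions chosen for brevity, and the AWS key shown is the placeholder from AWS's own documentation.

```python
import re

# Illustrative rules only; production scanners ship many more, tuned to cut false positives.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # A secret-sounding name assigned a quoted literal of 8+ characters.
    "generic_assignment": re.compile(
        r"(?i)(password|passwd|secret|api[_-]?key|token)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


source = '''
db_host = "db.internal"
db_password = "hunter2hunter2"
aws_key = "AKIAIOSFODNN7EXAMPLE"
'''
print(scan_text(source))  # [(3, 'generic_assignment'), (4, 'aws_access_key_id')]
```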

Scanning for YAML security risks. Developers use YAML files as declarative manifests for the Kubernetes resources within a cluster. These files define attributes such as API version, kind of resource, metadata, and specifications. Applying the principle of infrastructure-as-code to Kubernetes, YAML files make it easier to replicate configurations across different deployments and to automate cluster management. However, misconfigurations in a YAML file will be replicated as well. This will multiply any vulnerabilities across a company’s hybrid environment. To prevent this, developers should scan YAML files to identify any potential security risks before deploying the files to a cluster.
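A YAML manifest check can be as simple as walking the parsed pod spec and flagging risky fields. The sketch below checks a handful of real Kubernetes fields (`hostNetwork`, `hostPID`, `hostIPC`, `privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`); the function name and messages are hypothetical. In practice the dict would come from parsing the file, for example with PyYAML's `yaml.safe_load`; a dict literal keeps this sketch dependency-free.

```python
def audit_pod_spec(pod: dict) -> list[str]:
    """Flag a few common risky settings in a Pod manifest that has been parsed into a dict."""
    issues = []
    spec = pod.get("spec", {})
    # Sharing host namespaces weakens the isolation between the pod and its node.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            issues.append(f"pod: {field} is enabled")
    pod_ctx = spec.get("securityContext", {})
    for c in spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})
        if ctx.get("privileged"):
            issues.append(f"{name}: runs privileged")
        # Treat an unset value as risky, since it is not explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            issues.append(f"{name}: allowPrivilegeEscalation not set to false")
        # A container-level setting overrides the pod-level one.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            issues.append(f"{name}: runAsNonRoot not enforced")
    return issues


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {
        "hostNetwork": True,
        "containers": [
            {"name": "app", "image": "nginx:1.27", "securityContext": {"privileged": True}},
            {"name": "sidecar", "image": "busybox:1.36",
             "securityContext": {"allowPrivilegeEscalation": False, "runAsNonRoot": True}},
        ],
    },
}
for issue in audit_pod_spec(pod):
    print(issue)
```

Running a check like this in CI, before `kubectl apply`, stops a bad template from being replicated across every environment that uses it.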

Container

Container-level Kubernetes security includes measures to protect the Kubernetes environment from vulnerabilities within the container, and to protect the container from vulnerabilities in the Kubernetes environment.

Container image scanning. Container images are a prime attack vector. Threat actors can exploit any vulnerabilities, malware, or misconfigurations these images contain. Developers should pass container images through vulnerability scanners prior to deployment as part of the CI/CD pipeline.
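The pipeline step that acts on scan results can be sketched as a severity gate. Scanner output formats vary, so the finding structure below (`id`, `package`, `severity`) is a hypothetical shape, the CVE IDs are placeholders, and the choice to block on CRITICAL and HIGH is an assumed policy.

```python
# Severities that should fail the pipeline (an assumed policy; tune to your risk appetite).
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def blocking_findings(findings: list[dict], blocking: set[str] = BLOCKING_SEVERITIES) -> list[dict]:
    """Return findings whose severity should stop the image from being deployed."""
    return [f for f in findings if str(f.get("severity", "")).upper() in blocking]


def exit_code(findings: list[dict]) -> int:
    """A non-zero exit code tells most CI systems to fail the step."""
    return 1 if blocking_findings(findings) else 0


# Hypothetical scanner output, already parsed from JSON. IDs are placeholders.
findings = [
    {"id": "CVE-XXXX-0001", "package": "openssl", "severity": "CRITICAL"},
    {"id": "CVE-XXXX-0002", "package": "zlib", "severity": "low"},
]

for f in blocking_findings(findings):
    print(f"blocking: {f['id']} in {f['package']} ({f['severity']})")
print("exit code:", exit_code(findings))  # exit code: 1
```

A CI job would pass the result of `exit_code` to `sys.exit` so that a blocking finding fails the build before the image reaches the registry.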

Host hardening. The security of both containers and the Kubernetes cluster depends on the security of the underlying hosts. If a host is compromised, the attacker can gain access to all of the containers running on it, and even the entire cluster. Standard hardening practices such as applying software updates and security patches, minimizing running processes, and implementing strong authentication and least privilege are essential.

Minimizing software packages in the golden/base container image. With fewer installed packages, there are fewer potential vulnerabilities for an attacker to exploit. Starting from a minimal base also gives developers more control over image contents, and smaller images can be scanned for vulnerabilities more quickly.

Container image signing. Digitally signing container images establishes a chain of trust from the developer to the runtime environment. Users can prevent malicious code or unauthorized changes from reaching production by verifying the source and ensuring the integrity of each image prior to deployment.
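Signing itself needs an asymmetric key pair and a tool such as Sigstore's cosign, which is beyond a short example. A simpler, complementary integrity control is to reference images by their immutable content digest instead of a mutable tag, so a tag cannot be silently repointed at different contents. The sketch below, with hypothetical image names, flags containers that are not pinned this way. Note that a digest proves *what* was deployed, not *who* built it; that is what signatures add.

```python
import re

# A digest-pinned reference ends with "@sha256:" followed by 64 lowercase hex characters.
DIGEST_SUFFIX = re.compile(r"@sha256:[0-9a-f]{64}$")


def unpinned_images(pod: dict) -> list[str]:
    """List container images referenced by a mutable tag rather than an immutable digest."""
    spec = pod.get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    return [c["image"] for c in containers if not DIGEST_SUFFIX.search(c["image"])]


pod = {
    "spec": {
        "containers": [
            {"name": "app", "image": "registry.example.com/shop/app:1.4.2"},
            {"name": "proxy", "image": "registry.example.com/shop/proxy@sha256:" + "ab" * 32},
        ]
    }
}
print(unpinned_images(pod))  # ['registry.example.com/shop/app:1.4.2']
```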

Cluster

The complexity and flexibility of Kubernetes make it easy for misconfigurations, default settings, and other vulnerabilities to go unnoticed. A comprehensive approach to cluster-level security includes the following best practices:

Cluster configuration. Misconfigurations in a Kubernetes cluster can be deadly. For example, a compromised API server can be a gateway for incidents such as breaching the sensitive data stored in the cluster, deploying malicious workloads, manipulating cluster resources, and launching a DDoS attack. An exposed Kubelet API that allows anonymous, unauthenticated requests can give attackers access to nodes, secrets, or full control over clusters. Well-defined, consistently enforced standards for cluster configuration can help mitigate such risks.
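For the exposed-kubelet case, a configuration check might look like the sketch below. It inspects a kubelet `KubeletConfiguration` already parsed into a dict, using the real field names `authentication.anonymous.enabled`, `authentication.webhook.enabled`, `authorization.mode`, and `readOnlyPort`; the function itself is hypothetical. Because defaults have differed between kubelet command-line flags and the config file, the sketch conservatively treats any setting that isn't explicitly hardened as a finding.

```python
def audit_kubelet_config(cfg: dict) -> list[str]:
    """Flag kubelet settings that could let unauthenticated callers reach the kubelet API."""
    issues = []
    authn = cfg.get("authentication", {})
    # Missing values are treated as unsafe: only explicit settings count as hardened.
    if authn.get("anonymous", {}).get("enabled", True):
        issues.append("anonymous authentication is not explicitly disabled")
    if not authn.get("webhook", {}).get("enabled", False):
        issues.append("webhook authentication is not enabled")
    if cfg.get("authorization", {}).get("mode") != "Webhook":
        issues.append("authorization mode is not Webhook")
    # Assume the legacy read-only port (10255) is open unless explicitly set to 0.
    if cfg.get("readOnlyPort", 10255) != 0:
        issues.append("unauthenticated read-only port is not disabled")
    return issues


risky = {"authentication": {"anonymous": {"enabled": True}}, "authorization": {"mode": "AlwaysAllow"}}
hardened = {
    "authentication": {"anonymous": {"enabled": False}, "webhook": {"enabled": True}},
    "authorization": {"mode": "Webhook"},
    "readOnlyPort": 0,
}
print(audit_kubelet_config(risky))
print(audit_kubelet_config(hardened))  # []
```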

Role-based access control (RBAC). RBAC plays a key role in Kubernetes security by controlling access to the Kubernetes API and other cluster resources. By defining fine-grained permissions, enforcing least privilege, and setting up a different RBAC user for each integration, you can help prevent unauthorized access and limit the impact of compromised credentials.
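Least privilege in RBAC can be illustrated with a narrowly scoped Role plus a review check that flags rules worth a second look. The `pod-reader` Role and the `payments` namespace are hypothetical; `escalate`, `bind`, and `impersonate` are real RBAC verbs that let a subject extend its own or others' power. Flagging all Secret access is an assumed review policy, not a Kubernetes rule.

```python
# Verbs that let a subject grant itself or others more power.
ESCALATION_VERBS = {"escalate", "bind", "impersonate"}

# A hypothetical least-privilege Role: read-only access to pods and their logs in one namespace.
pod_reader = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "payments"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods", "pods/log"], "verbs": ["get", "list", "watch"]},
    ],
}


def overly_broad_rules(role: dict) -> list[int]:
    """Return indexes of rules that use wildcards, touch Secrets, or allow escalation verbs."""
    flagged = []
    for i, rule in enumerate(role.get("rules", [])):
        groups = rule.get("apiGroups", [])
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        if "*" in groups + resources + verbs or "secrets" in resources or ESCALATION_VERBS & set(verbs):
            flagged.append(i)
    return flagged


broad = {"rules": [
    {"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]},
    {"apiGroups": [""], "resources": ["secrets"], "verbs": ["get"]},
    {"apiGroups": ["rbac.authorization.k8s.io"], "resources": ["clusterroles"], "verbs": ["bind"]},
    {"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get"]},
]}
print(overly_broad_rules(pod_reader))  # []
print(overly_broad_rules(broad))       # [0, 1, 2]
```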

Namespace isolation. While not a security boundary itself, logically separating the resources within a cluster makes it possible to define resource-specific RBAC and networking policies across pods and namespaces.

Secure computing mode (seccomp). Seccomp is a key Kubernetes security feature that restricts the system calls (syscalls) a containerized application can make to the kernel. Only allowing authorized syscalls to pass through significantly reduces the risk of kernel-level exploits and container breakout scenarios, in which a threat actor exploits a container vulnerability to escape to the underlying host and, from there, reach the broader network.
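In a pod spec, seccomp is set through `securityContext.seccompProfile`, where a container-level profile overrides the pod-level one. The sketch below applies `RuntimeDefault` (the container runtime's built-in profile) rather than a custom syscall allowlist, and flags containers whose effective profile is missing or `Unconfined`. Unless the kubelet is configured to apply a default profile, a container with no profile typically runs unconfined. The helper functions and image names are hypothetical.

```python
import copy


def unconfined_containers(pod: dict) -> list[str]:
    """Names of containers whose effective seccomp profile is missing or Unconfined.
    A container-level profile overrides the pod-level one."""
    spec = pod.get("spec", {})
    pod_profile = spec.get("securityContext", {}).get("seccompProfile")
    flagged = []
    for c in spec.get("containers", []):
        profile = c.get("securityContext", {}).get("seccompProfile", pod_profile)
        if not profile or profile.get("type") == "Unconfined":
            flagged.append(c["name"])
    return flagged


def with_runtime_default(pod: dict) -> dict:
    """Return a copy of the pod with a pod-level RuntimeDefault profile, unless one is already set."""
    patched = copy.deepcopy(pod)
    ctx = patched.setdefault("spec", {}).setdefault("securityContext", {})
    ctx.setdefault("seccompProfile", {"type": "RuntimeDefault"})
    return patched


pod = {"spec": {"containers": [
    {"name": "app", "image": "registry.example.com/app:1.0"},
    {"name": "debug", "image": "busybox:1.36",
     "securityContext": {"seccompProfile": {"type": "Unconfined"}}},
]}}
print(unconfined_containers(pod))                        # ['app', 'debug']
print(unconfined_containers(with_runtime_default(pod)))  # ['debug'] (container-level override wins)
```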

Continuous patching. In Kubernetes, patching takes a different form than in a traditional architecture. Instead of being applied to running containers, the latest security patches are regularly applied to base container images in the registry. These updated images are then used to rebuild and redeploy containers as part of the regular container lifecycle, without the need for downtime.

Proper logging. Event logging in a Kubernetes cluster is essential for security monitoring, compliance, and forensic analysis. API server audit logs capture details of messages and requests within the cluster, including user identities, resources accessed, and operations performed. Node-level logs provide insights into container operations, and they help detect issues like unauthorized access attempts or container breakouts. Pod-level logs can reveal security issues within containerized applications.
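API server audit logs are written as one JSON event per line, with fields such as `verb`, `user.username`, `objectRef.resource`, `objectRef.namespace`, and `stage`. The sketch below filters those events for a few sensitive operations. Which operations count as sensitive and the allowlisted service account are assumptions for illustration. Keeping only `ResponseComplete` events avoids counting the same request at several stages.

```python
import json

# Assumed set of (resource, verb) pairs worth reviewing.
SENSITIVE = {
    ("secrets", "get"),
    ("secrets", "list"),
    ("rolebindings", "create"),
    ("clusterrolebindings", "create"),
}


def suspicious_events(lines: list[str], allowlist: set[str]) -> list[tuple]:
    """Return (user, verb, resource, namespace) for sensitive calls by non-allowlisted users."""
    hits = []
    for line in lines:
        event = json.loads(line)
        if event.get("stage", "ResponseComplete") != "ResponseComplete":
            continue
        user = event.get("user", {}).get("username", "")
        ref = event.get("objectRef", {})
        resource, verb = ref.get("resource"), event.get("verb")
        if (resource, verb) in SENSITIVE and user not in allowlist:
            hits.append((user, verb, resource, ref.get("namespace")))
    return hits


log_lines = [
    json.dumps({"verb": "get", "user": {"username": "system:serviceaccount:ci:deployer"},
                "objectRef": {"resource": "secrets", "namespace": "ci", "name": "registry-creds"}}),
    json.dumps({"verb": "list", "user": {"username": "alice@example.com"},
                "objectRef": {"resource": "secrets", "namespace": "payments"}}),
    json.dumps({"verb": "create", "user": {"username": "system:serviceaccount:web:default"},
                "objectRef": {"resource": "clusterrolebindings", "name": "new-admin"}}),
    json.dumps({"verb": "get", "user": {"username": "bob@example.com"},
                "objectRef": {"resource": "pods", "namespace": "web"}}),
]
for hit in suspicious_events(log_lines, allowlist={"system:serviceaccount:ci:deployer"}):
    print(hit)
```

Allowlisting by exact identity, rather than ignoring every `system:` user, matters here: a compromised service account is exactly the kind of caller this filter should surface.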

Admission controller configuration. Admission controllers act as gatekeepers for the API server, intercepting and processing authorized requests to the cluster. DevOps teams can set policies and rules to ensure that these requests meet security policies and compliance requirements, prevent privileged or vulnerable containers from running, and ensure appropriate limits on resource consumption.
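A custom validating admission webhook receives an `AdmissionReview` object and answers with `allowed` plus, when rejecting, a `status` message, echoing the request's `uid`. The sketch below shows only that decision logic for one hypothetical policy (reject privileged containers); the HTTPS server, TLS certificates, and `ValidatingWebhookConfiguration` registration are omitted. For this particular rule, Kubernetes' built-in Pod Security Admission can enforce the same result without custom code.

```python
def review_pod(admission_review: dict) -> dict:
    """Build an AdmissionReview response that rejects pods with privileged containers."""
    request = admission_review["request"]
    spec = request["object"].get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    privileged = [c["name"] for c in containers if c.get("securityContext", {}).get("privileged")]

    # The response must echo the request's uid so the API server can match them up.
    response = {"uid": request["uid"], "allowed": not privileged}
    if privileged:
        response["status"] = {
            "code": 403,
            "message": "privileged containers are not allowed: " + ", ".join(privileged),
        }
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}


incoming = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "example-request-uid",
        "object": {"kind": "Pod", "spec": {"containers": [
            {"name": "app", "image": "nginx:1.27", "securityContext": {"privileged": True}},
        ]}},
    },
}
print(review_pod(incoming)["response"])
```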

Secrets hygiene. As mentioned earlier, hardcoded secrets pose many security issues. Developers should also avoid storing secrets in plain-text environment variables or configuration files, where they can be easily read and exposed. Storing secrets in an external secret management system lets you protect them with encryption at rest and fine-grained access control. As with any password, rotate secrets regularly to reduce the risk of long-term exposure.
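Regular rotation is easier to enforce when something checks it. The sketch below flags secrets whose last rotation is older than a maximum age. The 90-day limit is an assumed policy, and the secret names and timestamps are hypothetical; in practice they might come from a secret manager's metadata.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy: rotate every secret at least every 90 days.
MAX_AGE = timedelta(days=90)


def stale_secrets(last_rotated: dict[str, datetime], now: datetime, max_age: timedelta = MAX_AGE) -> list[str]:
    """Return the names of secrets whose last rotation is older than max_age."""
    return sorted(name for name, rotated_at in last_rotated.items() if now - rotated_at > max_age)


# Hypothetical rotation timestamps (timezone-aware, so subtraction is unambiguous).
last_rotated = {
    "payments/db-password": datetime(2024, 9, 1, tzinfo=timezone.utc),
    "payments/api-token": datetime(2025, 1, 15, tzinfo=timezone.utc),
}
now = datetime(2025, 1, 31, tzinfo=timezone.utc)
print(stale_secrets(last_rotated, now))  # ['payments/db-password']
```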

Cloud

Cloud-native applications run on cloud infrastructure, so any breach of a Kubernetes environment can potentially spread throughout the target’s cloud resources and network. Cloud-level Kubernetes security practices focus on limiting access and enforcing isolation between Kubernetes and its host.

Least privilege. As a fundamental tenet of zero-trust security, the organization should already be enforcing the principle of least privilege throughout its environment. For Kubernetes, this includes creating the fewest possible user accounts, each limited to role-essential permissions.

Network access. By default, Kubernetes allows unrestricted interaction between its components. Organizations should configure network policies to block all communications by default, and allow only necessary connections among pods, clusters, and the broader network environment.
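A minimal sketch of the default-deny approach, written in Python to match the other examples: it builds two NetworkPolicy objects as dicts and prints them as JSON, which `kubectl apply -f` accepts as readily as YAML. The namespace, labels, and port are hypothetical. NetworkPolicies are only enforced if the cluster's network (CNI) plugin supports them, and denying all egress also blocks DNS lookups until a rule allows them.

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """NetworkPolicy that selects every pod in `namespace` and allows no traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches all pods in the namespace
            # Both types listed with no ingress/egress rules, so nothing is allowed.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_ingress_from(namespace: str, name: str, target: dict, source: dict, port: int) -> dict:
    """Open one specific path: pods labeled `source` may reach pods labeled `target` on `port`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


deny = default_deny_policy("payments")
allow = allow_ingress_from("payments", "api-to-db", {"app": "db"}, {"app": "api"}, 5432)
print(json.dumps(deny, indent=2))
print(json.dumps(allow, indent=2))
```

Because NetworkPolicies are additive, the allow policy punches one hole in the default deny without loosening anything else.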

Isolation. Enforcing isolation between cloud environments, containers, and hosts helps limit the extent of a breach and container breakout. Tools and practices mentioned above—such as RBAC, seccomp, and namespace isolation—are all important ways to ensure isolation.

Conclusion

Our deep dive into Kubernetes has unraveled both its transformative potential and the intricacies that make it a double-edged sword. We began by defining Kubernetes and shedding light on its complex architecture—an ecosystem engineered for scalability and automation but also one that presents unique challenges. These challenges, from dynamic configurations to rapid orchestration, often lead to false positives in security monitoring, complicating the task of maintaining a robust defense.

Moreover, by breaking down the four Cs of Kubernetes security—Code, Container, Cluster, and Cloud—we highlighted a framework that not only demystifies its security posture but also offers a targeted approach to mitigating risks. Understanding each “C” equips you with the insights needed to tailor your security strategy and reduce the noise of false alerts.

Ultimately, embracing Kubernetes means balancing its power with vigilant, intelligent security practices. As the landscape evolves, so too must our strategies, ensuring that while we harness the benefits of Kubernetes, we also remain steadfast in protecting our systems from emerging threats.

This post was originally published on Expel's blog (see pt. 1, 2). Reach out personally, or see the resources below to learn how Expel, the leading cloud MDR provider, can help:

Kubernetes Mind Map: A guide to the tactics used most often during attacks in Kubernetes environments, including the Kubernetes services where attacks originate, the API calls made, and tips for investigating related incidents.

Expel MDR for Kubernetes data sheet: How Expel provides 24×7 detection and response for Amazon EKS, AKS, and GKE Kubernetes environments.

Secure your Kubernetes environment: Service information, demos, and reports on trends and strategies in cloud and Kubernetes security.